Existential Risk

An existential risk is anything that could cause the extinction of the human race.

...

Nuclear War

Nuclear close calls are incidents that could lead to, or could have led to, at least one unintended nuclear explosion. These incidents typically involve a perceived imminent threat to a nuclear-armed country, which could lead to retaliatory strikes against the perceived aggressor. The damage caused by an international nuclear exchange is not necessarily limited to the participating countries: the hypothesized rapid climate change associated with even a small-scale regional nuclear war could threaten food production worldwide, a scenario known as nuclear famine.

See also: List of nuclear close calls

Collapse of Human Civilization

Aliens

Superintelligence and Artificial Intelligence

“In our world, smart means a 130 IQ and stupid means an 85 IQ—we don’t have a word for an IQ of 12,952.” WaitButWhy on The AI Revolution: The Road to Superintelligence

Biological Hazards, Bioweapons, Famine

Pandemics, Viruses and Disease

Water: From Monster Waves to Water Shortage

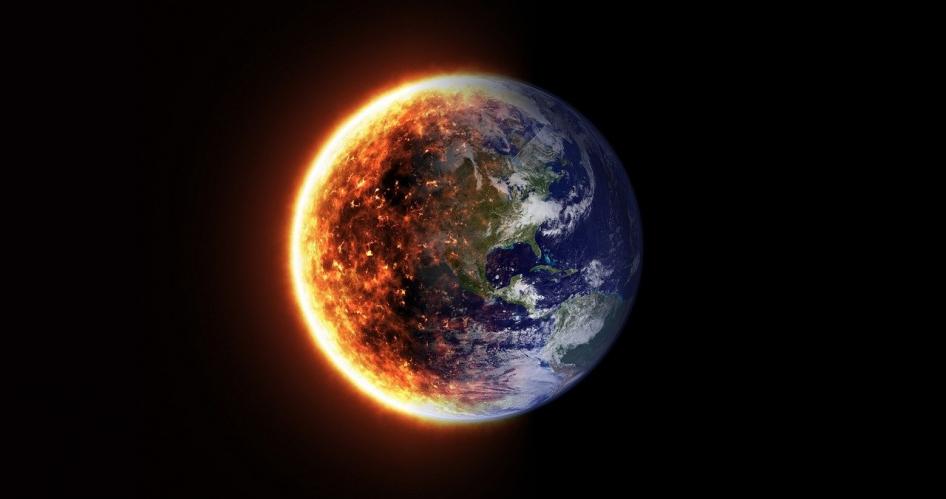

Climate Change, Extreme Weather

Might climate change pose an existential risk? According to 80,000 Hours, extreme climate change could increase existential risk if:

- It could make Earth so hot that it becomes uninhabitable for human life;

- It acts as a ‘risk factor’, increasing other existential risks.

Asteroid Impact, Gamma-ray Burst Supernovae, Andromeda Collision, Cosmochalypse

Supervolcanoes

Nanotechnology

“Can we survive technology?”

―

Humans Evolving

Existential Risk Consensus

Wikipedia on Global catastrophic risk

A global catastrophic risk is a hypothetical future event which could damage human well-being on a global scale, even endangering or destroying modern civilization. An event that could cause human extinction or permanently and drastically curtail humanity's potential is known as an existential risk.

The dichotomy of existential risk, according to Wikipedia:

- Anthropogenic risk: Artificial intelligence; Biotechnology; Cyberattack; Environmental disaster; Experimental technology accident; Global warming; Mineral resource exhaustion; Nanotechnology; Warfare and mass destruction; World population and agricultural crisis.

- Non-anthropogenic risk: Asteroid impact; Cosmic threats; Extraterrestrial invasion; Pandemic; Natural climate change; Volcanism.

Further Reading on Existential Risk

- 80,000 Hours on The case for reducing existential risks.

- Nick Bostrom on Analyzing Human Extinction Scenarios and Related Hazards

- The Future of Life Institute is a non-profit focused on maximizing the benefit of technology, especially by mitigating its risks, in four areas of focus